
Hawkeye - Eye tracking in your hands?

A quick look at the app that could make eye tracking easier than ever.

Did eye tracking just get much easier?

If you’ve had a chance to read my previous article, you’ll be aware that carrying out an Eye Tracking test usually involves some material constraints. So when a new application arrived on the market promising to simplify this type of test, we weren’t going to miss the opportunity to try it out.

Here’s our test of Hawkeye, the latest Eye Tracking application for mobile phones.

What is this app made for?

The basic operation of Hawkeye is not entirely new. It follows the same logic as Eye Tracking via webcam.

This type of Eye Tracking, initiated by the Xlab project, uses facial recognition algorithms that focus on the user’s eyes to determine the position of their gaze on a screen.

I had already experimented with this type of solution… and found that its lack of precision meant that it could not be used in qualitative tests. And yet, with a computer, things are theoretically simpler than on a mobile phone. The webcam is fixed at eye level and the user sits straight in front of the screen. On a mobile, it’s the other way round! When you hold a phone in your hands, there is constant movement, coming as much from the user’s face as from their hands. The screen is also smaller, which makes eye tracking much more complex.

So it’s no understatement to say that HawkEye Lab, the company founded by recent graduate Matt Moss, is preparing to take on quite a technical challenge. To do so, the application has a secret weapon: the TrueDepth camera. This new model of front camera is available only on the iPhone X, XS and XR, where it is used to unlock the phone using facial recognition. As a logical consequence, the Hawkeye app is only available for these 3 smartphones.

How does it work?

Campaign setup

I start by opening an application with a particularly sleek design. The interface is uncluttered and after a quick onboarding, the application asks me to create my first test campaign.

To do this, you can enter the URL of the site or image you want to test (in my case, that of the online retailer Asos.com) into the campaign. The application also lets you add an instruction text for your testers.

Sessions are segmented by page

In all, creating a campaign will take you no more than 2 minutes. All you have to do now is enter the name of your first participant and you’re ready to launch your first session.

Device calibration

I launched the first test by putting myself in the role of a user. The session begins with a gaze calibration stage. Although I found the application a little short on explanations, the step is nonetheless very simple to perform. First you fix your gaze on a central point, then on 4 points that appear at the extremities of the screen, then on a final central point to complete the calibration. In my case, the operation only took about twenty seconds. Other testers were less lucky, however, and some had to repeat the process several times.

calibration stage
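To make the idea behind this step more concrete: a calibration like this can be read as fitting a mapping between what the camera "thinks" you are looking at and the known position of each target. The sketch below is only an illustration of that principle, not Hawkeye's actual method, which I have no visibility into. The screen size, the choice of the four corners as the "extremities", the raw values and all function names are assumptions made up for the example.

```python
from statistics import mean

def fit_axis(raw, target):
    """Least-squares fit of target ~ scale * raw + offset for one axis."""
    raw_mean, target_mean = mean(raw), mean(target)
    variance = sum((r - raw_mean) ** 2 for r in raw)
    if variance == 0:
        raise ValueError("calibration points must differ along this axis")
    covariance = sum((r - raw_mean) * (t - target_mean)
                     for r, t in zip(raw, target))
    scale = covariance / variance
    return scale, target_mean - scale * raw_mean

def calibrate(raw_points, screen_points):
    """Return a function mapping a raw gaze estimate to screen coordinates."""
    sx, ox = fit_axis([p[0] for p in raw_points], [p[0] for p in screen_points])
    sy, oy = fit_axis([p[1] for p in raw_points], [p[1] for p in screen_points])
    return lambda x, y: (sx * x + ox, sy * y + oy)

# Hypothetical screen size; targets: centre, four corners, centre again.
W, H = 375, 812
targets = [(W / 2, H / 2), (0, 0), (W, 0), (W, H), (0, H), (W / 2, H / 2)]

# Made-up raw estimates: the targets scaled by 0.5 and shifted, to mimic
# a tracker whose output is consistent but not yet aligned with the screen.
raw = [(0.5 * x + 20, 0.5 * y - 10) for x, y in targets]

to_screen = calibrate(raw, targets)
print(to_screen(70, 90))  # a raw estimate converted to screen coordinates
```

A real tracker would be noisy rather than perfectly linear, which is exactly why a poor calibration run has to be repeated.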

The next screen lets you test the calibration. I’m still on a page of the application and a cross indicating the zone where my gaze is fixed moves across the screen.

It’s at this stage that the first limitations of the application are revealed. The eye tracking was too imprecise (the cross had a nasty tendency to stick to the edges), so I had to repeat the calibration. After this second attempt, although it still wasn’t exact, the position of the cross seemed more faithful to my actual gaze and I decided to launch the test.

So here I am, on the Asos home page, which I scan before starting to navigate naturally between the different pages of the site. Only the crosshair that keeps following my gaze reminds me that I’m in a test situation.

After about 10 minutes of browsing, I decided to end this first test session.

Recording analysis

Once the session is over, the application automatically processes the images and sends them to a server. For myself and my first testers, the operation was quick, but for one of my testers the upload continued indefinitely until we were forced to close the application… losing the entire test session in the process! Not cool :(

Apart from this disappointment, I have to admit that the post-session screen is a pleasant surprise.

The browsing session is split between the different pages consulted. This feature saves you a lot of time and makes it easy to compare several testers on the same page, even if the times spent are not identical.

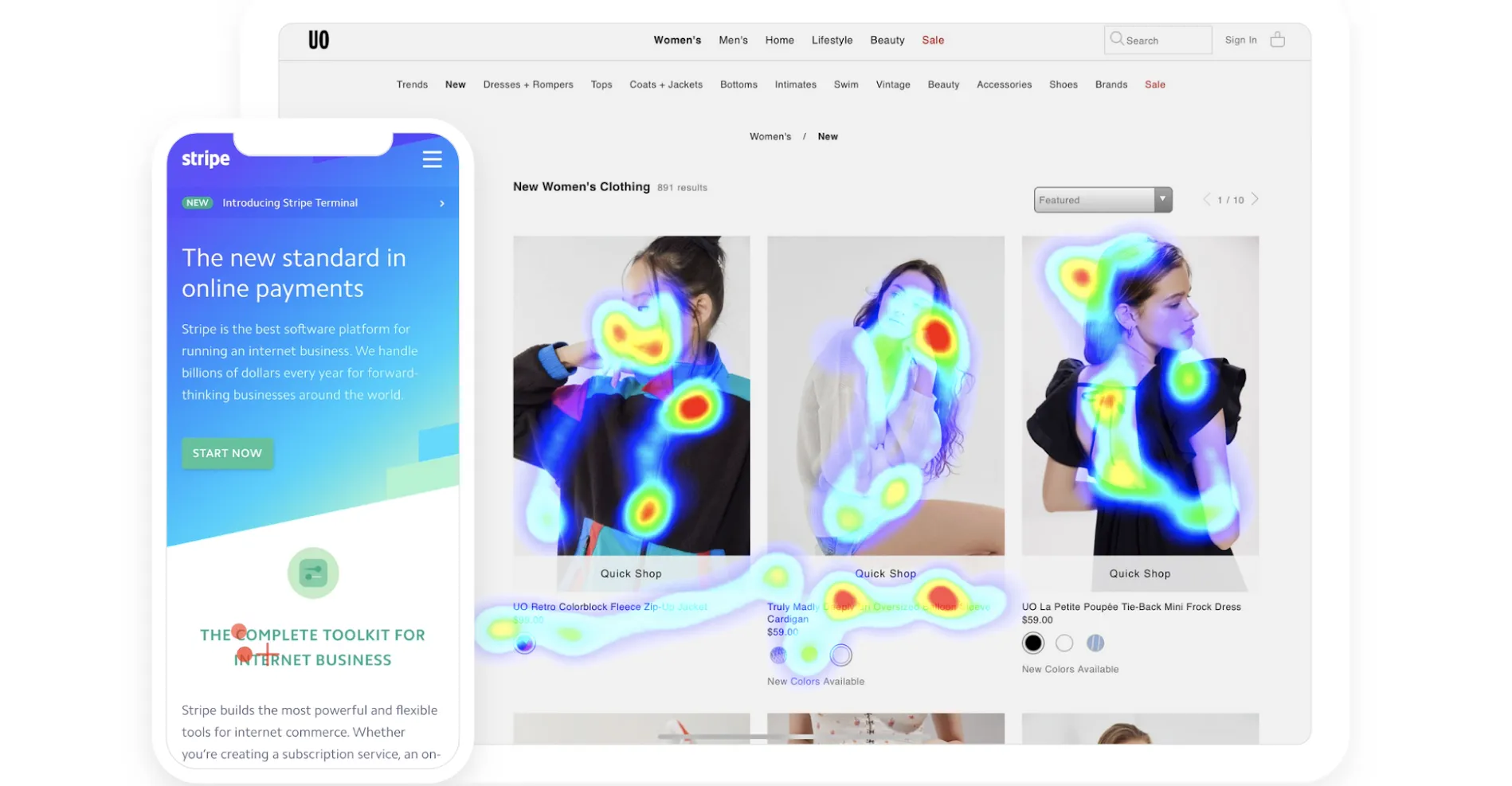

For each page consulted, the application displays the entire page (as a full-length capture) and lets you view the analysis as a heatmap or as Areas of Interest, two of the most commonly used representations in Eye Tracking.

Heatmap and Areas of Interest
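For readers who have never worked with these two representations, here is a minimal sketch of what they boil down to: a heatmap counts gaze samples per zone of the page, and Areas of Interest measure what share of the samples falls inside rectangles you define. This is a generic illustration, not Hawkeye's implementation; the grid size, the sample coordinates and the area names are invented for the example.

```python
from collections import Counter

def heatmap(gaze_points, cell=50):
    """Count gaze samples per cell of a square grid (cell size in pixels)."""
    return Counter((int(x // cell), int(y // cell)) for x, y in gaze_points)

def aoi_share(gaze_points, areas):
    """Share of gaze samples inside each named rectangle (x, y, width, height)."""
    total = len(gaze_points)
    shares = {}
    for name, (ax, ay, aw, ah) in areas.items():
        hits = sum(1 for x, y in gaze_points
                   if ax <= x < ax + aw and ay <= y < ay + ah)
        shares[name] = hits / total if total else 0.0
    return shares

# Made-up gaze samples (page coordinates in pixels) for a single page.
samples = [(10, 10), (20, 30), (120, 40), (130, 260), (140, 270)]

# Hypothetical Areas of Interest on that page.
areas = {"header": (0, 0, 375, 100), "product_grid": (0, 200, 375, 400)}

print(heatmap(samples).most_common())
print(aoi_share(samples, areas))
```

Both views are only as good as the gaze points fed into them, which matters for the assessment that follows.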

Overall assessment

So after a few test sessions, what can we learn from the application?

First of all, its strong point: this solution is very non-invasive for testers. It requires no particular effort to set up, and can even be used to conduct tests in the usual way, with Eye Tracking running in the background. It is these points that make webcam Eye Tracking methods so attractive. More traditional eye tracking methods (glasses or infrared bars) are much more expensive and restrictive.

However, the application does have one weakness, and it’s a big one: its accuracy. The gaze tracking is very approximate, so you can’t use the application for qualitative testing. Worse still, relying on it could lead you to false insights that could be very damaging for your project.

Eye tracking on consumer devices: a deceptively good idea?

The results of this test are frustrating. Despite the evident care put into its design, Hawkeye comes up against the same pitfall as other webcam eye-tracking systems. Even with the capabilities of the best cameras on the market, mobile webcam eye tracking is still a long way from achieving the precision of a professional tool. By way of comparison, the tests carried out on the desktop with Xlab seemed to me to be much more accurate.

So I don’t know if we’ll ever be able to do proper eye tracking with a smartphone’s front camera. There’s still a (very) long way to go, but we shouldn’t condemn this type of technology in advance.

The promise of Hawkeye is great. As it progresses, this technology could really democratise eye tracking on mobile phones.

Unless…

At the end of this test, one question persists… if the application’s results are so lacking in precision, why did its creator take the time to develop it and give it such a polished design?

Most probably because the technology comes from the Hawkeye Access project, also developed by Matt Moss. Unlike the application we have just tested, Hawkeye Access is dedicated to smartphone accessibility for people with motor impairments. The eye-tracking cross is used as a pointer, and a blink of the eye acts as a press on an area of the screen.

The project has even caught the eye of Apple, which is considering buying the company to integrate it natively into the iPhone. It’s a great project, and one that I’m already more than convinced by, so we can only hope it succeeds!

Originally published in 2019

Thomas